TESTING

By Daniel R. DeMarco, Ph.D.

Guilty until Proven Innocent:

Presumption of Positivity and the Presumed Problem of Nonconfirmable Presumptives in Food Pathogen Diagnostics

Photo credit: Rost-9D/iStock / Getty Images Plus via Getty Images

There is a widely held belief that the rate of nonconfirmable presumptives (NCPs) in food pathogen diagnostics has increased significantly in recent years. This increase has been most closely associated with real-time polymerase chain reaction (PCR) testing, although pathogen testing platforms based on other technologies (e.g., enzyme-linked immunosorbent assay/enzyme-linked fluorescent assay) have not escaped the same perception. The information needed to settle the matter resides in the databases of third-party laboratories. However, the sheer volume of data and the quirks of the laboratory information management systems that hold them have so far prevented anyone from assembling a meaningful data set that could answer the question definitively. Nevertheless, the belief is real, and the limited data that have been analyzed suggest that NCP rates are indeed rising. The question is, why? In the discussion that follows, I will attempt to answer that question (or show how it might be answered) in several different ways. I hope this approach will also clarify why the answer is so important and how it affects almost everyone involved in food production and/or food safety, up to and including the consumer.

First, Some Clarifications

When we encounter a new or difficult concept, an analogy to a more familiar one can be helpful. To begin, I will use an analogy to gain perspective and to illuminate some of the contradictions inherent in the generally interchangeable terms “presumptive” and “presumptive positive” as they are used in food pathogen diagnostics today. The analogy is to the presumption of innocence, a cornerstone of American jurisprudence. I will then address several items that are critical to framing and understanding the issue and what is at stake, including:

- Definitions of important terms, particularly “false positive” and “nonconfirmable presumptive”

- Uses of the term “presumptive positive”

- Hypotheses that purport to explain the (supposed) increase in NCPs.

I will close with some options for making the system work better for all stakeholders, including the consumers to whom we owe safe food. The focus will be on the term “presumptive positive” as it relates to PCR/quantitative PCR (qPCR) results in food pathogen diagnostics, although the general principles are (mostly) applicable to other rapid methods and in other sectors (e.g., clinical diagnostics).

Understanding by Analogy: The Presumption of Positivity and the Presumption of Innocence

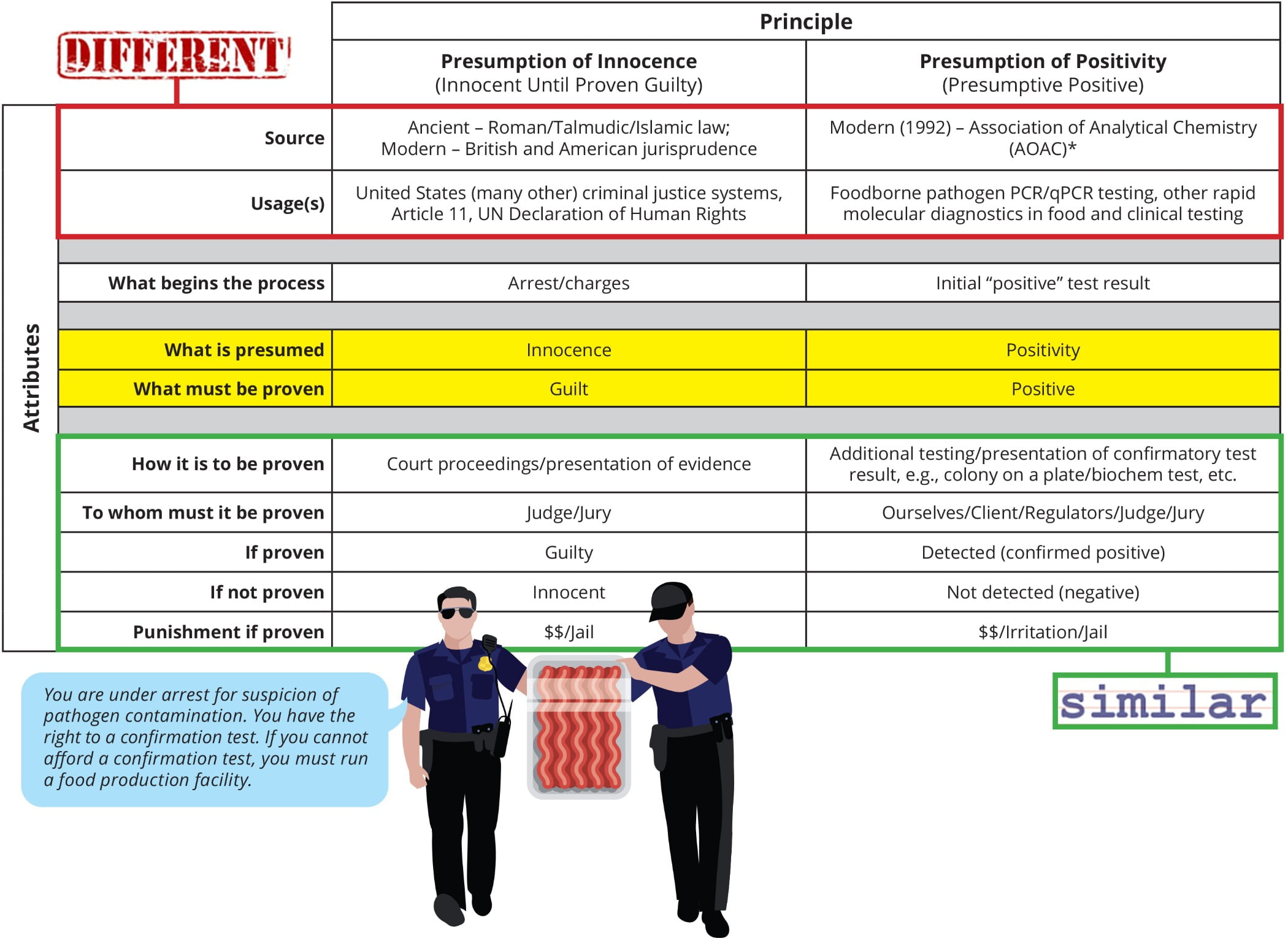

Figure 1 compares the subjects of my analogy. At first blush, they might appear dissimilar in the extreme. However, in the context of the process of arrest and trial, many similarities become apparent. The initial “positive” test result is analogous to the sample being arrested and charged. The charge of being positive is only presumptive and must be proven through confirmatory testing, usually by culture. The data from confirmatory testing become the evidence with which we adjudicate the “guilt” or “innocence” of the sample; that is, if confirmed positive, it is found guilty and reported as detected; if not, it is found innocent and reported as not detected. Instead of a courtroom, the process takes place in the lab, and the roles of judge and jury fall to all the stakeholders in the final result. In both the courtroom and the court of the laboratory, the punishment for being found guilty can be significant and almost always includes financial penalties.

Where the analogy becomes most interesting is highlighted in yellow in Figure 1. In the courtroom, innocence is presumed, and guilt is what must be proven. In the laboratory, by contrast, we presume guilt (positivity). Another way to put this is that in the criminal justice system, we presume the opposite of what must be proven, whereas in PCR diagnostics, what we presume and what we must prove are the same. You might say, “But that’s just how we talk about it; we could just as easily say we are trying to prove the sample is negative.” But then why do we refer to and report the result as a “confirmed positive/detected” when additional testing proves it as such, but only as “negative” or “not detected” when it does not? We don’t call it a confirmed negative. For purposes of the analogy, I will conveniently ignore the fact that it is logically impossible to prove that something doesn’t exist; we can only show that we could not find it. It is as if we have turned the concept of the presumption of innocence on its head.

FIGURE 1. Presumption of Innocence vs. Presumption of Positivity

Another interesting way to think about this analogy is in the context of protecting public safety. For example, a suspected serial killer is often remanded into custody after arrest, with no chance of bail, because of the need to protect the public. In this case, the confirmation of a presumptive positive becomes completely analogous: we attempt to prove the “innocence” of the sample while protecting the public. We err on the side of caution, if you will. It’s just that we’ve substituted “presumptive” for “accused.” If the term were “suspected” positive instead of “presumptive” positive, the alignment would be complete.

If you follow this instructive analogy to its logical conclusion and agree with the usages of the two terms as shown in Figure 1, then you must accept that every time we call a sample a presumptive positive, we are committing a crime or at the very least denying due process to the sample. While it can sometimes feel this way, this is obviously a ridiculous conclusion, which serves to highlight the limitations of the analogy.


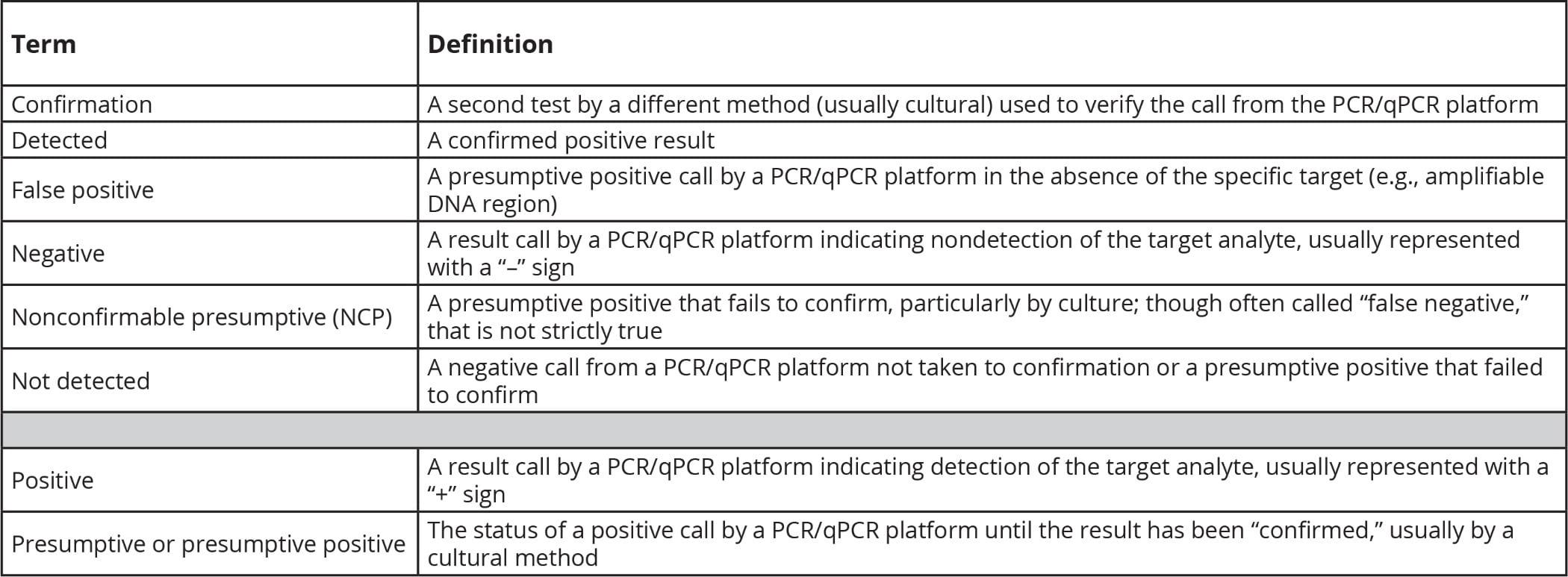

TABLE 1. Definitions of Important Terms Used in the Text

Defining the Terms: “NCPs” vs. “False Positives”

Before we can approach the question of why NCP rates are increasing, we need to define several terms that are widely used in diagnostics. Table 1 lists these terms and my definitions for them.

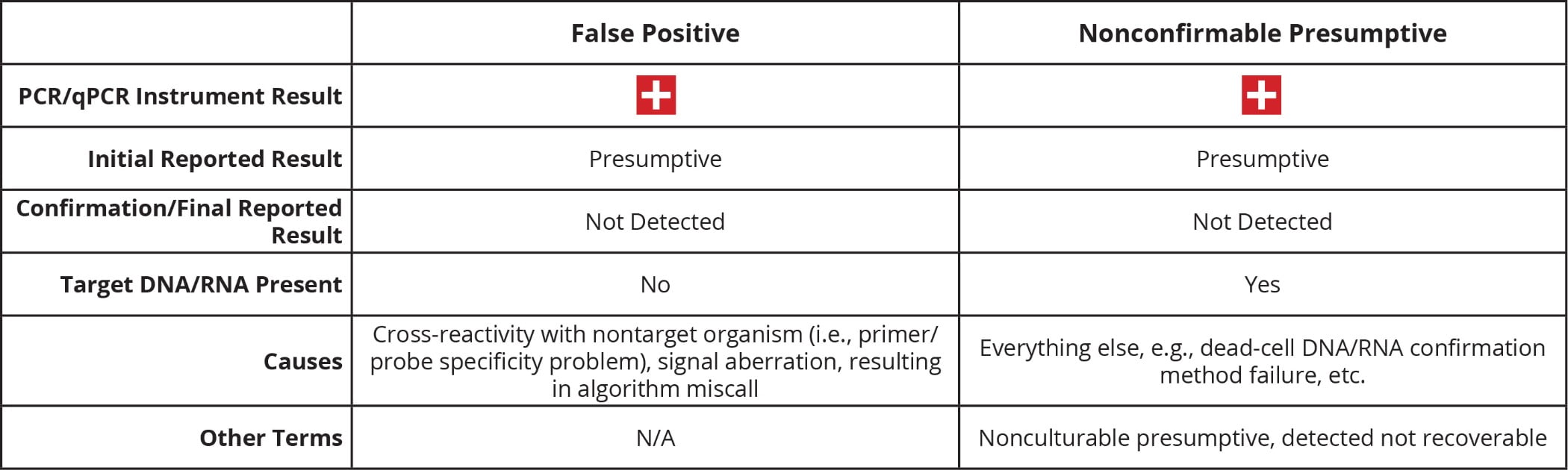

Two of the terms (“NCP” and “false positive”) are of the highest importance for this discussion (Table 2). The key message is that NCPs and false positives are not the same thing, although they are often conflated. The critical difference is that a true false positive can only occur when there is no target DNA/RNA in the reaction. In those instances, the signal triggering the positive result is generated by nonspecific amplification of DNA/RNA from a nontarget organism or by some physicochemical effect that causes an aberrant signal. An NCP, by contrast, may well contain target DNA/RNA (e.g., from dead cells) that culture simply cannot recover, and the presumptive result alone cannot tell us which situation we are in. No matter the cause, a presumptive result must be acted upon as if it were a true positive every single time; the only exception is the rare case of incontrovertible laboratory error. This requirement to act on the presumptive result is the cause of much frustration for all involved, especially when that initial presumptive cannot be confirmed.

TABLE 2. NCP vs. False Positive
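The distinction between an NCP and a false positive can be sketched in a few lines of Python. This is a minimal illustration, not a laboratory procedure: the `Outcome` names and the `adjudicate` and `is_false_positive` functions are hypothetical, and the model assumes a single screen result followed by a single culture confirmation. Classifying an NCP as a false positive requires knowing whether target DNA/RNA was in the reaction, which is usually unknown, so the sketch returns `None` in that case.

```python
from enum import Enum
from typing import Optional


class Outcome(Enum):
    NEGATIVE = "not detected (screen negative)"
    CONFIRMED = "detected (confirmed positive)"
    NCP = "not detected (nonconfirmable presumptive)"


def adjudicate(screen_positive: bool, culture_confirmed: Optional[bool]) -> Outcome:
    """Map a screen result plus a culture confirmation result to a reported outcome."""
    if not screen_positive:
        return Outcome.NEGATIVE
    if culture_confirmed is None:
        # A presumptive awaiting confirmation must be acted on as if it were
        # a true positive, so no final result can be reported yet.
        raise ValueError("presumptive positive: act on it and confirm before reporting")
    return Outcome.CONFIRMED if culture_confirmed else Outcome.NCP


def is_false_positive(outcome: Outcome,
                      target_present: Optional[bool]) -> Optional[bool]:
    """An NCP is a false positive only if no target DNA/RNA was in the reaction.

    Non-NCP outcomes are never counted as false positives here.
    Returns None when target presence is unknown, the usual situation.
    """
    if outcome is not Outcome.NCP:
        return False
    if target_present is None:
        return None
    return not target_present


print(adjudicate(True, False))               # Outcome.NCP
print(is_false_positive(Outcome.NCP, None))  # None: the NCP alone cannot tell us
```

Note that the NCP and the confirmed negative end up with the same reported result, "not detected," even though the sketch keeps them as separate outcomes.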

Legitimate and Other Uses of the Term “Presumptive Positive”

Why exactly do we call a particular result presumptive? The reasons most often given fall into three broad classes: legitimate, debatable, and other.

Legitimate

Performance: The method is considered a low-cost and/or rapid screen that eliminates the majority of negative samples and flags “questionable” samples for more attention. Alternatively, we have reason to question one or more performance characteristics of the method; that is, its sensitivity, specificity, robustness, etc. are believed to be less than optimal or to differ from those of the “gold standard” (usually cultural) method, which is believed to be superior.

Required detection of multiple targets: When multiple targets must all be detected to indicate a positive result, the individual targets may be carried by different organisms in the same enrichment (e.g., stx AND eae gene targets to find Shiga toxin-producing Escherichia coli), so detecting all of them does not prove that any single organism carries them all.

“Defensibility” of results: The initial presumptive (positive) result may be due to artifacts of the analysis. Confirmation of a presumptive provides a more defensible result, which is required for regulatory action.

New/unknown science: The science behind the method and/or the target analyte is new and/or actively evolving, and we are still building experience running the method in the “real world.” For example, in the early days of the pandemic, labs running the same method for SARS-CoV-2 detection [state departments of public health and the U.S. Centers for Disease Control and Prevention (CDC)] reported their results differently, with the states reporting them as presumptive while CDC reported them as confirmed.
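A toy model shows why the multiple-target rule described under “Required detection of multiple targets” can be satisfied without any single organism carrying both genes. The organism labels and gene sets below are hypothetical, and the sketch assumes the screen simply pools whatever targets are present anywhere in the enrichment.

```python
from typing import Dict, Set

# Hypothetical enrichment: organism label -> gene targets it carries.
enrichment: Dict[str, Set[str]] = {
    "organism A (hypothetical, eae only)": {"eae"},
    "organism B (hypothetical, stx only)": {"stx"},
}

REQUIRED_TARGETS = {"stx", "eae"}


def screen_positive(organisms: Dict[str, Set[str]]) -> bool:
    """AND rule: every required target is detected somewhere in the enrichment."""
    detected = set().union(*organisms.values())
    return REQUIRED_TARGETS <= detected


def one_organism_has_all(organisms: Dict[str, Set[str]]) -> bool:
    """True only if a single organism carries every required target."""
    return any(REQUIRED_TARGETS <= genes for genes in organisms.values())


print(screen_positive(enrichment))       # True: the pooled enrichment meets the rule
print(one_organism_has_all(enrichment))  # False: no single organism explains it
```

In this situation, culture confirmation would look for an isolate carrying both targets and find none, even though the screen behaved exactly as designed.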

Debatable

Misleading: A positive result would be misleading in terms of the actual risk the method is said to assess. For example, a method that detects DNA/RNA from dead cells may indicate risk where there is none. A secondary method should be used to “confirm” the presence of live cells. But how do we know that a negative confirmation is not caused by failure of the confirmation method? Further, the detection of DNA/RNA from a dead pathogen still indicates pathogens were present; this is arguably an indicator of some level of risk.

Other

Fear: A result is sometimes labeled presumptive out of fear of the consequences of reporting a positive. Rather than trying to argue that a result is “only presumptive,” a better path is to have a clearly articulated rationale for testing and a plan for how to respond to the result, whatever it may be.


Hypotheses to Explain the (Supposed) Increase in NCPs

Many competing hypotheses purport to explain the belief that NCPs are on the rise.

The first, and least popular, hypothesis holds that NCPs are not actually on the rise. Overall testing volume has increased, with a corresponding increase in presumptives, so it just “feels” like there are more NCPs, even though confirmation rates have barely changed. The limited data analysis that has been done does not support this hypothesis.
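Telling a rising NCP count apart from a rising NCP rate is simple arithmetic once the data are assembled. The volumes below are hypothetical, chosen only to show that doubling the number of tests can double the number of NCPs while leaving the NCP rate (NCPs divided by presumptives) unchanged; the `ncp_rate` helper is likewise illustrative.

```python
def ncp_rate(presumptives: int, confirmed: int) -> float:
    """Fraction of presumptive positives that could not be confirmed."""
    if presumptives <= 0:
        raise ValueError("no presumptives to evaluate")
    return (presumptives - confirmed) / presumptives


# Hypothetical volumes: testing doubles, presumptive and confirmation rates unchanged.
periods = {
    "before": {"tests": 10_000, "presumptives": 200, "confirmed": 120},
    "after": {"tests": 20_000, "presumptives": 400, "confirmed": 240},
}

for label, d in periods.items():
    ncps = d["presumptives"] - d["confirmed"]
    print(f"{label}: {ncps} NCPs, NCP rate = {ncp_rate(d['presumptives'], d['confirmed']):.2f}")
```

Here the NCP count doubles from 80 to 160 while the rate stays at 0.40, which is exactly the pattern this first hypothesis predicts.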

A much-favored second hypothesis posits that PCR/qPCR methods are more sensitive than they used to be because modern PCR/qPCR chemistries are “better” than those of the past. This hypothesis “feels” right; after all, in most other areas of technology, what exists today far outstrips what existed in the past. However, although today’s PCR methods are extremely well optimized and can detect 1–10 copies per reaction, the same was true of the first PCR methods used in food. And, just as in the past, those 1–10 copies must actually make it into the reaction to be detected. Improved chemistry may be part of the explanation in some specific cases, but the differences in sensitivity between the most and least sensitive commercially available PCR methods are simply too small to account for the observed increase in NCPs.
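The point that copies must actually reach the reaction can be made concrete with a standard Poisson sampling model. The sketch below assumes target copies are randomly distributed in the liquid added to the reaction and ignores extraction losses; the 5 µL input volume and the concentrations are hypothetical values for illustration only.

```python
import math


def prob_at_least_one_copy(copies_per_ml: float, reaction_input_ul: float) -> float:
    """Poisson probability that a reaction receives at least one target copy."""
    expected_copies = copies_per_ml * reaction_input_ul / 1000.0  # convert uL to mL
    return 1.0 - math.exp(-expected_copies)


# Hypothetical 5 uL of enrichment/lysate per reaction (varies by method).
for conc in (10, 100, 1_000, 10_000):
    p = prob_at_least_one_copy(conc, 5.0)
    print(f"{conc:>6} copies/mL -> P(>=1 copy in reaction) = {p:.3f}")
```

Under these assumptions, even a reaction able to detect a single copy fails whenever no copy is delivered, which happens often at low concentrations no matter how good the chemistry is.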

A third hypothesis says that PCR test protocols, rather than chemistries, have changed in ways that make presumptives more likely. In search of shorter time-to-result, PCR and qPCR methods have moved almost exclusively to single-stage enrichments. Hence, the samples going into the reaction are less dilute, which increases the chances of detecting DNA/RNA from dead or nonviable cells. A less dilute input sample may also decrease the chances of confirming a presumptive, because the background flora are also less dilute and have potentially had less exposure to selective agents.
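The dilution argument behind this hypothesis can be sketched numerically. The model is a simplification that assumes DNA from dead cells is only diluted during enrichment (it does not multiply) and treats 1 g of sample as roughly 1 mL. All values are hypothetical: 25 g of sample in 225 mL of primary broth, a nonviable load of 10,000 copies/g, an optional 1:100 secondary transfer standing in for a two-stage protocol, and 5 µL of enrichment per reaction. The Poisson term estimates the chance that at least one nonviable copy reaches the reaction.

```python
import math


def nonviable_copies_per_ml(copies_per_g: float, sample_g: float,
                            primary_broth_ml: float,
                            secondary_dilution: float = 1.0) -> float:
    """Concentration of DNA from dead cells at the end of enrichment.

    Dead cells do not grow, so their DNA is only diluted: first into the
    primary broth, then (two-stage protocol) by the secondary transfer.
    secondary_dilution=1.0 models a single-stage enrichment.
    """
    total_copies = copies_per_g * sample_g
    after_primary = total_copies / (sample_g + primary_broth_ml)  # assumes 1 g ~ 1 mL
    return after_primary * secondary_dilution


def prob_reaction_gets_a_copy(copies_per_ml: float, input_ul: float = 5.0) -> float:
    """Poisson probability that at least one copy lands in the reaction."""
    return 1.0 - math.exp(-copies_per_ml * input_ul / 1000.0)


# Hypothetical nonviable load of 10,000 copies/g in a 25 g sample.
single = nonviable_copies_per_ml(1e4, 25, 225)
two_stage = nonviable_copies_per_ml(1e4, 25, 225, secondary_dilution=0.01)

for label, conc in (("single-stage", single), ("two-stage", two_stage)):
    print(f"{label}: {conc:.0f} copies/mL, "
          f"P(>=1 nonviable copy in reaction) = {prob_reaction_gets_a_copy(conc):.3f}")
```

With these made-up numbers, the chance that nonviable DNA reaches the reaction falls from roughly 99% for the single-stage enrichment to roughly 5% after the secondary transfer, while live cells would have had the opportunity to regrow in the second stage.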

The final, and arguably most popular, hypothesis suggests that the increase in NCPs is due to the detection of DNA/RNA from dead, nonviable, or lethally injured cells. Dead cells cannot be cultured and hence cannot be confirmed. This hypothesis has great explanatory power and much supporting experimental evidence (e.g., pretreatment with enzymes or other chemicals that destroy or inactivate exogenous DNA decreases the number of presumptives). The question remains, however, why this would be different today than in the past. The possibilities include:

- Changes in sanitization practices may have left more dead cells behind to trigger presumptives.

- Changes in enrichment procedures may lead to higher concentrations of dead-cell DNA and background flora, making both presumptives more likely and confirmations more difficult.

- Increased use of probiotics and/or phage in pathogen control programs can complicate confirmation, and some phage products have been shown to trigger presumptive positive PCR/qPCR results.

Ways Forward

We need more research that further characterizes NCP enrichments. One approach might be microbiome analysis based on 16S sequencing, either specific to the target pathogen or covering all bacteria present. At the very least, this should provide a definitive answer to whether target pathogen DNA is present in NCP enrichments.

We also need improvements to culture confirmation methods for commercial, as opposed to regulatory, use. PCR “colony” confirmation of Salmonella from triple sugar iron agar slants and Listeria monocytogenes from confirmation plates is now a reality. This technique has reduced turnaround times (and arguably improved accuracy) but has had no impact on NCP rates.

“Big data” has come to dominate thinking in many fields but has had little impact on pathogen testing. What if we could use the massive amounts of digital data held by testing laboratories in a more predictive way? If we, or our computers, considered all information available about a given sample (e.g., matrix, source, historical results, time of year) before making a presumptive call, perhaps we would be more willing to act on that result.
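One standard way to fold prior information into the interpretation of a screen result is Bayes’ theorem. The sketch below is not a method proposed in this article: it assumes a prior probability of target presence could be estimated from historical results for a given matrix, source, and time of year, and the sensitivity, specificity, and prior values are all hypothetical. It computes the positive predictive value, the probability that target is actually present given a positive screen. Here “present” means target nucleic acid is present, which, per the definitions above, is not the same as culturable.

```python
def positive_predictive_value(prior: float, sensitivity: float,
                              specificity: float) -> float:
    """Bayes' theorem: P(target present | screen positive)."""
    true_pos = sensitivity * prior                    # P(positive and present)
    false_pos = (1.0 - specificity) * (1.0 - prior)   # P(positive and absent)
    total = true_pos + false_pos
    if total == 0:
        raise ValueError("a positive screen is impossible under these inputs")
    return true_pos / total


# Hypothetical assay performance and hypothetical historical priors.
SENSITIVITY, SPECIFICITY = 0.98, 0.99
for label, prior in (("rarely positive matrix", 0.001),
                     ("occasionally positive matrix", 0.05),
                     ("frequently positive matrix", 0.20)):
    ppv = positive_predictive_value(prior, SENSITIVITY, SPECIFICITY)
    print(f"{label}: prior = {prior:.3f}, PPV = {ppv:.2f}")
```

Under these assumptions, the same screen signal carries very different weight (a PPV of roughly 9% versus 96%) depending on the sample’s history. Such a calculation would inform confidence in a result; it would not change the requirement to act on every presumptive.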

This article is a mix of fact and opinion. Opinions given are my own and not necessarily endorsed or shared by my employer.

Daniel R. DeMarco, Ph.D., is the associate director of science at Eurofins Microbiology Laboratories.